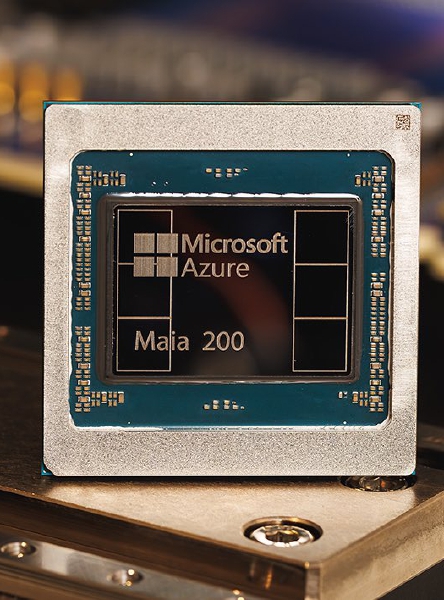

NEW YORK (EFE) - Microsoft yesterday unveiled Maia 200, the second generation of its artificial intelligence (AI) chip, with which it seeks to reduce its dependence on Nvidia and compete with the cloud chips developed by Google and Amazon.

The new model arrives two years after the first version, the Maia 100, which was not made available to customers.

“It is the most efficient inference system Microsoft has ever deployed,” said Scott Guthrie, Microsoft executive vice president for cloud and AI, in a blog post.

According to the company, the Maia 200 is designed as an inference processor and promises up to 30% more performance per dollar than Microsoft’s current hardware. It is already being deployed in Azure data centers in the United States to power services such as Microsoft 365 Copilot, Foundry, and OpenAI’s recent GPT models.

The chips are being integrated first into data centers in the central region of the United States and will later reach those in the West and other locations. They are made with Taiwan Semiconductor Manufacturing Co.’s three-nanometer process.

The technology company’s announcement is part of the race to lead generative AI. Large cloud providers are striving to develop their own chips so that they do not depend exclusively on Nvidia, which leads the market.

The launches by Amazon, Google and Microsoft are helping to increase competition in a booming market.
